MADRL总结

MADRL总结

Sheldon ZhengMADRL

Key Value 文献类型 journalArticle 标题 Deep Reinforcement Learning for Multiagent Systems: A Review of Challenges, Solutions, and Applications 中文标题 多Agent系统的深度强化学习:挑战、解决方案和应用综述 作者 [[Thanh Thi Nguyen]]、 [[Ngoc Duy Nguyen]]、 [[Saeid Nahavandi]] 期刊名称 [[IEEE Transactions on Cybernetics]] DOI 10.1109/TCYB.2020.2977374 引用次数 281 📊 影响因子 19.118 (Q1) 条目链接 My Library PDF 附件 2020__Nguyen et al__Deep Reinforcement Learning for Multiagent Systems.pdf

Abstract

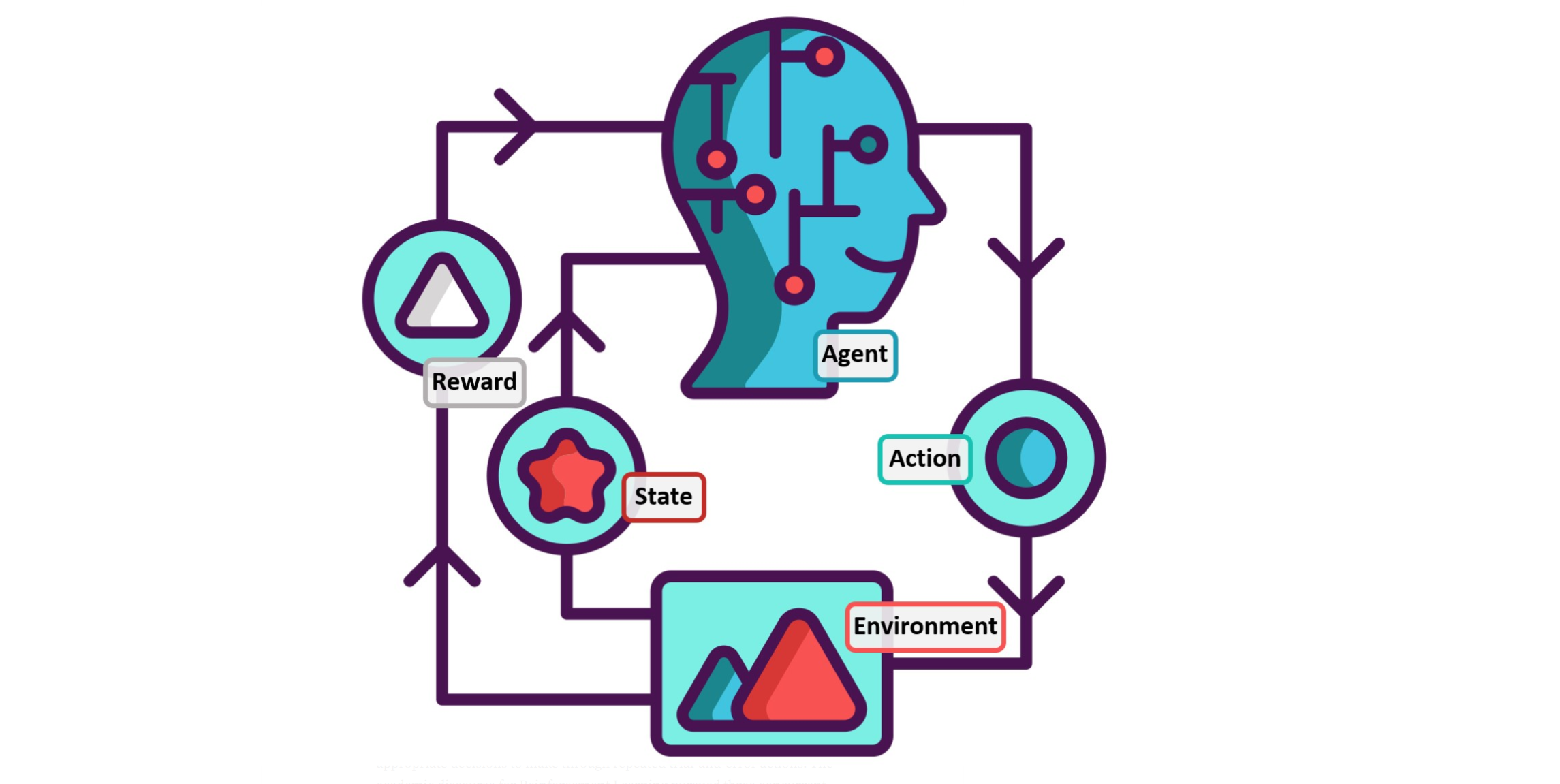

Reinforcement learning (RL) algorithms have been around for decades and employed to solve various sequential decision-making problems. These algorithms, however, have faced great challenges when dealing with high-dimensional environments. The recent development of deep learning has enabled RL methods to drive optimal policies for sophisticated and capable agents, which can perform efficiently in these challenging environments. This article addresses an important aspect of deep RL related to situations that require multiple agents to communicate and cooperate to solve complex tasks. A survey of different approaches to problems related to multiagent deep RL (MADRL) is presented, including nonstationarity, partial observability, continuous state and action spaces, multiagent training schemes, and multiagent transfer learning. The merits and demerits of the reviewed methods will be analyzed and discussed with their corresponding applications explored. It is envisaged that this review provides insights about various MADRL methods and can lead to the future development of more robust and highly useful multiagent learning methods for solving real-world problems.

【摘要翻译】强化学习(RL)算法已经出现了几十年,并用于解决各种顺序决策问题。然而,这些算法在处理高维环境时面临着巨大的挑战。深度学习的最新发展使RL方法能够为复杂和有能力的代理驱动最优策略,这些代理可以在这些具有挑战性的环境中高效地执行。本文讨论了深度RL的一个重要方面,涉及到需要多个代理进行通信和协作以解决复杂任务的情况。综述了与多智能体深度RL(MADRL)相关问题的不同方法,包括非平稳性、部分可观测性、连续状态和动作空间、多智能体训练方案和多智能体转移学习。本文将分析和讨论这些方法的优缺点,并探讨它们的相应应用。据设想,这篇综述提供了关于各种MADRL方法的见解,并可导致未来发展更健壮、更有用的多智能体学习方法,用于解决现实问题。

Single Agent Deep RL

Actor-Critic

“The actor structure is used to select a suitable action according to the observed state and transfer to the critic structure for evaluation.”

行动者结构用于根据观察到的状态选择合适的行动,并转移到批评家结构进行评估。

“Deep Q-Network”

DQN使用Bellman公式来定义最小化损失函数:

\(\mathcal{L}(\beta)=\mathbb{E}\left[\left(r+\gamma \max _{a^{\prime}} Q\left(s^{\prime}, a^{\prime} \mid \beta^{\prime}\right)-Q(s, a \mid \beta)\right)^2\right]\)

DQN的缺陷:

“However, using a neural network to approximate value function is proved to be unstable and may result in divergence due to the bias originated from correlative samples”

然而,使用神经网络逼近值函数被证明是不稳定的,并且由于样本相关性所产生的偏差可能导致发散

解决方案:

设置一个experience replay memory来打断样本之间的关联

“Double DQN (DDQN)” 双DQN

“The idea of DDQN is to separate the selection of “greedy” action from action evaluation.”

DDQN的思想是将“贪婪”行为的选择与行为评估分开。

\(\mathcal{L}_{\mathrm{DDQN}}(\beta)=\mathbb{E}\left[\left(r+\gamma Q\left(s^{\prime}, \arg \max _{a^{\prime}} Q\left(s^{\prime}, a^{\prime} \mid \beta\right) \mid \beta^{\prime}\right)-Q(s, a \mid \beta)\right)^2\right]\)

关键区别在于\(\beta\) 在公式里面是不带撇的,意思是使用当前的参数来估计一个最大\(a^`\) 得到的最大Q值。

问题:

“However, the fact that selecting randomly samples from the experience replay does not completely separate the sample data”

“然而,从经验回放中随机选择样本并不能完全分离样本数据”

方案:prioritized experience replay

\(p_i=\left|\delta_i\right|=\left|r_i+\gamma \max _a Q\left(s_i, a \mid \beta^{\prime}\right)-Q\left(s_{i-1}, a_{i-1} \mid \beta\right)\right|\)

采用Q值之间的绝对TD差作为样本的随机抽取优先级。

“Dueling network” 决斗网络

DQN策略选取会遇到一个问题就是又是会产生多个候选情况,而这两者都看起来没有负面作用,但是在之后的过程中其中某些会产生问题。作者在这里用了一个比喻:

“when driving a car and there are no obstables ahead, we can follow either the left lane or the right lane. If there is an obstacle ahead in the left lane, we must be in the right lane to avoid crashing. Therefore, it is more efficient if we focus only on the road and obstacles ahead.”

当开车时,前面没有障碍物,我们可以走左车道或右车道。如果前面的左车道有障碍物,我们必须在右车道以避免撞车。因此,如果我们只关注前面的道路和障碍,效率会更高。

“In the dueling architecture, there are two collateral networks that coexist: one network, parameterized by θ , estimates the state-value function V(s|θ) and the other one, parameterized by θ ′, estimates the advantage action function A(s, a|θ ′).”

决斗网络就是采用状态-值函数V和优势函数A来共同构成Q函数,这样不但使用了状态值,还对不同的状态-动作进行了优势评估,综合决定Q函数。

\(\begin{aligned} Q\left(s, a \mid \theta, \theta^{\prime}\right)=& V(s \mid \theta) \\ &+\left(A\left(s, a \mid \theta^{\prime}\right)-\frac{1}{\left|\Delta_\pi\right|} \sum_{a^{\prime}} A\left(s, a^{\prime} \mid \theta^{\prime}\right)\right) \end{aligned}\)

“Deep recurrent Q-network (DRQN)” 深度递归Q网络

“These games are often called partially observable MDP problems. The straightforward solution is to replace the fully connected layer right after the last convolutional layer of the policy network with a recurrent long shortterm memory,”

DQN的另一个缺点是它使用四个帧的历史作为策略网络的输入。因此,DQN在解决当前状态依赖于大量历史信息(如“双灌篮”或“冻伤”游戏)的问题时效率低下,这些游戏通常被称为部分可观察的MDP问题。直截了当的解决方案是将策略网络最后一个卷积层之后的完全连接层替换为经常性的长短期记忆

“Deep attention recurrent Q-network (DARQN)” 深度关注复发Q网络(DARQN)

“Sorokin et al. added attention mechanism into DRQN so that the network can focus only on important regions in the game, allowing smaller network’s parameters and hence speeding the training process.”

“Sorokin等人在DRQN中添加了注意力机制,这样网络就可以只关注游戏中的重要区域,从而允许较小的网络参数,从而加快训练过程。

Multi-agent Deep RL

“Nonstationarity” :非平稳性

“Learning among the agents is complex because all agents potentially interact with each other and learn concurrently. The interactions among multiple agents constantly reshape the environment and lead to nonstationarity.”

智能体之间的学习很复杂,因为所有智能体都可能相互交互并同时学习。多个智能体之间的交互不断重塑环境并导致非平稳性(nonstationarity)的产生。

在这种情况下,智能体之间的学习有时会导致代理的策略发生变化,并可能影响其他智能体的最优策略。对一个动作的潜在回报的估计是不准确的,因此,在多智能体环境中的给定点上,好的政策在未来不可能保持不变。

“The convergence theory of Q-learning applied in a single-agent setting is not guaranteed to most multiagent problems as the Markov property does not hold anymore in the nonstationary environment”

由于马尔可夫特性在非平稳环境中不再成立,因此在单智能体环境中应用的Q学习收敛理论不能保证适用于大多数多智能体问题.

“deep repeated update Q-network (DRUQN)” 深度重复更新Q网络

“deep loosely coupled Q-network (DLCQN)” 深度松耦合Q网络

“DLCQN relies on the loosely coupled Q-learning proposed in [52], which specifies and adjusts an independence degree for each agent using its negative rewards and observations. Through this independence degree, the agent learns to decide whether it needs to act independently or cooperate with other agents in different circumstances.”

DLCQN依赖于[52]中提出的松散耦合Q-学习,该学习算法使用其负面奖励和观察结果为每个智能体指定和调整独立度。通过这种独立程度,智能体学会在不同的情况下决定是否需要独立行动或与其他智能体合作。

“lenient-DQN (LDQN)” 仁慈DQN(LDQN)

“Leniency in the context of a multiagent setting describes the situation where a learning agent ignores the poor actions of a co-learner, which leads to low rewards, and still cooperates with the co-learner with the hope that the co-learner can improve his actions in the future.”

在多智能体环境中,宽容是指learning智能体忽略了合作学习者的不良行为,从而导致低回报,但是仍然与合作学习者合作,希望合作学习者能够在未来改进其行为的情况。

“hystereticDQN (HDQN)” 滞后DQN(HDQN)

“The experimental results demonstrate the superiority of LDQN against HDQN in terms of convergence to optimal policies in a stochastic reward environment.”

“weighted DDQN” 加权DDQN

“The experiments show the better performance of WDDQN against the DDQN in two multiagent environments with stochastic rewards and large state space.”

实验表明,WDDQN在两个具有随机奖励和大状态空间的多智能体环境中对DDQN具有更好的性能。

LDQN>HDQN

WDDQN>DDQN

“Partial Observability:”PMOD

DRQN

“Unlike DQN, DRQN approximates Q(o, a), which is a Q-function with an observation o and an action a, by a recurrent neural network. DRQN treats a hidden state of the network \(h_{t−1}\) as an internal state.”

与DQN不同,DRQN通过递归神经网络近似于Q(o,a),这是一个具有观测值o和动作a的Q函数。DRQN处理网络的隐藏状态ht−1作为内部状态。

“With the recurrent structure, the DRQN-based agents are able to learn the improved policy in a robust sense in the partially observable environment”

“Deep distributed recurrent Q-network (DDRQN)” 深分布递归Q网络

“The success of DDRQN is relied on three notable features, that is, last-action inputs, interagent weight sharing, and disabling experience replay”

last-action inputs:

“last-action inputs, requires the provision of the previous action of each agent as input to its next step”

interagent weight sharing:

“The interagent weight sharing means that all agents use weights of only one network, which is learned during the training process.”

disabling experience replay:

“The disabling experience replay simply excludes the experience replay feature of DQN”

“The DDRQN therefore learns a Q-function of the form \(Q(o^t_m, h^m_{t-1}, m, a^m_{t−1}, a^m_t; θi)\), where each agent receives its own index m as the input. Weight sharing decreases learning time because it reduces the number of parameters to be learned.”

因此,DDRQN学习\(Q(o^t_m, h^m_{t-1}, m, a^m_{t−1}, a^m_t; θi)\)形式的Q函数,其中每个智能体接收自己的索引m作为输入。权重共享减少了学习时间,因为它减少了要学习的参数的数量。

问题:

“Although each agent has a different observation and hidden state, this approach, however, assumes that agents have the same set of actions.”

DDRQN假设所有的agent都有相同的动作集,这在现实中某些复杂场景中是不太适用的。

“Deep policy inference Q-network (DPIQN)” 深度策略推理Q网络

“eep recurrent policy inference Q-network (DRPIQN)” 深度循环策略推理Q网络(DRPIQN)

“Both DPIQN and DRPIQN are learned by adapting the network’s attention to policy features and their own Q-values at various stages of the training process.”

“Multitask MARL (MT-MARL)” 多任务MARL(MT-MARL)

“multitask MARL (MT-MARL) that integrates hysteretic learners [62], DRQNs [44], distillation [63], and concurrent experience replay trajectories (CERTs),”

“which are a decentralized extension of experience replay strategy proposed in [6]. The agents are not explicitly provided with task identity (thus partial observability) while they cooperatively learn to complete a set of decentralized POMDP tasks with sparse rewards.”这是[6]中提出的经验重放策略的分散扩展。当智能体协作学习完成一组分散的POMDP任务时,没有明确地向其提供任务身份(因此是部分可观察性),并且回报很少。

“MADDPG-M”

“Deep deterministic policy gradient (DDPG)” 深度确定性策略梯度

“Agents need to decide whether their observations are informative to share with other agents and the communication policies are learned concurrently with the main policies through experience”

“Bayesian action decoder (BAD)” 贝叶斯动作解码器(BAD)

“BAD relies on a factorized and approximate belief state to discover conventions to enable agents to learn optimal policies efficiently. This is closely relevant to theory of mind that humans normally use to interpret others’ actions.”

BAD依赖于一个分解的近似信念状态来发现约定,从而使代理能够有效地学习最优策略。这与人类通常用来解释他人行为的心理理论密切相关。

“The experimental results on a proof-of-principle two-step matrix game and the cooperative partial-information card game Hanabi demonstrate the efficiency and superiority of the proposed method against traditional policy gradient algorithms”

“原理证明两步矩阵游戏和合作部分信息卡游戏Hanabi的实验结果表明,相对于传统的策略梯度算法,该方法具有效率和优越性”

“MAS Training Schemes”

“CLDE Centralized Learning and Decentralized Execution” 集中学习和分散执行

centralized learning:

where a group of agents can be trained simultaneously by applying a centralized method via an open communication channel

decentralized execution:

Decentralized policies where each agent can take actions based on its local observations have an advantage under partial observability and in limited communications during execution.

centralized learning

concurrent learning 并行学习

并行学习是从自己的观察进行学习,而集中学习是从共同观察经验中进行学习。

centralized learning 和 concurrent learning 的区别:

“Centralized policy attempts to obtain a joint action from joint observations of all agents while the concurrent learning trains agents simultaneously using the joint reward signal.”

集中式策略试图从所有代理的联合观察中获得联合行动,同时并行学习使用联合奖励信号同时训练智能体。

“parameter sharing” 参数共享

“scheme allows agents to be trained simultaneously using the experiences of all agents although each agent can obtain unique observations.”

即便每一个智能体都有自己独立的观察,但是参数共享可以同时对多个智能体进行训练,因此可以将单个智能体的深度学习拓展到多智能体

“Parameter Sharing and TRPO” 参数共享和TRPO

“Reinforced interagent learning (RIAL)” 强化交互学习(RIAL)

“deep Q-learning has a recurrent structure to address the partial observability issue, in which independent Q-learning offers individual agents to learn their own network parameters.”

“Differentiable interagent learning (DIAL)” 可微分交互学习(DIAL)

“DIAL pushes gradients from one agent to another through a channel, allowing end-to-end backpropagation across agents”

“Deep reinforcement opponent network (DRON)” 深加固对手网络

“encodes observation of the opponent agent into DQN to jointly learn a policy and behaviors of opponents without domain knowledge”将对手智能体的观察结果编码到DQN中,以共同学习对手的策略和行为,而无需领域知识

“Multiagent deep deterministic policy gradient (MADDPG)” 多代理深度确定性策略梯度(MADDPG)

“MADDPG features the centralized learning and decentralized execution paradigm in which the critic uses extra information to ease the training process while actors take actions based on their own local observations.”

“Counterfactual multiagent (COMA)” 反事实多智能体

“Unlike MADDPG [74], COMA can handle the multiagent credit assignment problem [76] where agents are difficult to work out their contribution to the team’s success from global rewards generated by joint actions in cooperative settings. COMA, however, has a disadvantage that focuses only on discrete action space while MADDPG is able to learn continuous policies effectively.”

与MADDPG不同,COMA可以处理多代理人信贷分配问题[76],代理人很难从合作环境中的联合行动产生的全球奖励中计算出他们对团队成功的贡献。然而,COMA有一个缺点,即只关注离散的行动空间,而MADDPG能够有效地学习连续策略。

“Continuous Action Spaces” 连续动作空间

DQN 的方法局限于有限空间动作空间内寻求解,即DQN旨在寻找具有最大动作值的动作,因此需要在连续动作(状态)空间的每一步进行迭代优化过程,将动作空间离散化是一个方法,但是这样会产生很多问题,比如纬度诅咒问题,动作数相对于自由度的指数级增长。

“Trust region policy optimization (TRPO)” 信任区域策略优化

“Deterministic policy gradient (DPG)” 确定性策略梯度

“Recurrent DPG (RDPG)” 经常性DPG(RDPG)

“PS-TRPO”

“This method is based on the foundation of TRPO so that it can deal with continuous action spaces effectively.”

“Transfer Learning for MADRL” MADRL的迁移学习

“Policy distillation” 政策蒸馏

“Progressive neural networks” 渐进式神经网络

“Actor-mimic method” 演员模拟法

MADRL Applications

“Sequential social dilemma (SSD) model” 顺序社会困境(SSD)模型

“Extension of matrix game social dilemma (MGSD)” 矩阵博弈社会困境的扩展

“swarm systems” 群系统

“Dueling DDQN (DDDQN)” Dueling DDQN(DDDQN)

“Asynchronous advantage AC (A3C)” 异步优势AC(A3C)

“CommNet model” CommNet模型

“Contextual deep Q-learning” 上下文深层Q-学习

“Contextual multiagent AC” 上下文多智能体AC

Future Challenges & Research Directions

“Imitation learning” 模仿学习

“imitation learning tries to map between states to actions as a supervised approach. It directly generalizes the expert strategy to unvisited states so that it is close to a multiclass classification problem in cases of finite action set.” 模仿学习作为一种有监督的方法,试图在状态和行为之间进行映射。它将专家策略直接推广到未访问的状态,也因此模仿学习较为接近于有限动作集情况下的多类分类问题。

“Inverse RL” 反向RL

“Inverse RL assumes that the expert policy is optimal regarding the unknown reward function” 反向强化学习假设关于未知回报函数的专家策略是最优的

“A very straightforward challenge arose from these applications is the requirement of multiple experts who are able to demonstrate the tasks collaboratively.”

这些应用程序产生的一个非常直接的挑战是需要多个能够协作演示任务的专家。

“Furthermore, the communication and reasoning capabilities of experts are difficult to be characterized and modeled by autonomous agents in the MAS domain.”

此外,在MAS领域中,专家的通信和推理能力很难被自主智能体识别和建模。

“In addition, for complex tasks or behaviors which are difficult for humans to demonstrate, there is a need for alternative methods that allow human preferences to be integrated into deep RL”

此外,对于人类难以演示的复杂任务或行为,需要替代方法,将人类偏好融入到深度RL中。

“Human-on-the-loop”

“In human-on-the-loop, agents execute their tasks autonomously until completion, with a human in a monitoring or supervisory role reserving the ability to intervene in operations carried out by agents.”

即人类作为监督者,由机器完全自主完成任务,人类只作为监督管理,保留干预其执行任务的能力

“Scaling to large systems”

Homogeneous agents 同质多智能体

“Since agents have common behaviors, such as actions, domain knowledge, and goals (homogeneous agents), the scalability can be achievable by (partially) centralized training and decentralized execution”

Heterogeneous agents 异质多智能体

“In the heterogeneous setting with many agents, the key challenge is how to provide the most optimal solution and maximize the task completion success based on self-learning with effective coordinative and cooperative strategies among the agents.”

方向、挑战总结:

- 将专家经验进行分类和归纳总结,让自主智能体能够识别并进行建模

- 将人类的互动和偏好和深度学习相结合

- 深度学习很难和人类现有的人机协作科技产生互动,人类很难处理大量的单调工作,而机器很难处理创造性工作